Author: Julia Jurkowska

Source localization and signal reconstruction - case study for oddball data

Introduction

In this tutorial, we will learn how to localize sources from EEG data and reconstruct signals at those sources using MVPURE_py, an extension to MNE-Python. Source localization allows us to move beyond sensor-level analysis to estimate where in the brain the measured activity originates. Once sources are identified, we can reconstruct time series from vertices of interest for further analysis.

We will cover the following steps:

Reading all necessary data for sample_subject. You can download this dataset here.

Computing the data and noise covariance matrices (\(R\) and \(N\), respectively).

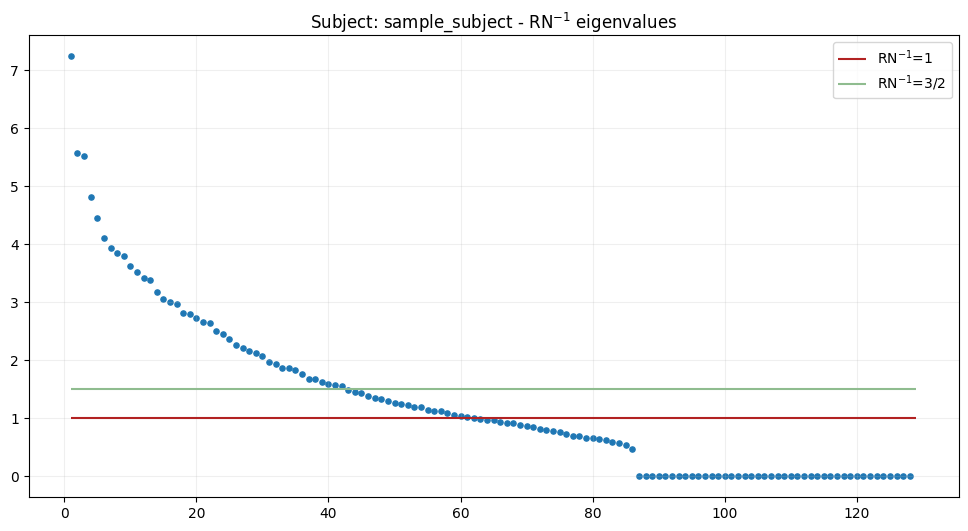

Analyzing the eigenvalues of \(RN^{-1}\) to choose the number of sources to localize and an appropriate optimization (rank) parameter.

Localizing the specified number of sources.

Reconstructing source signals for vertices of interest and plotting the results.

All steps will be repeated for two time windows: “sensory” (50–200 ms after stimulus onset) and “cognitive” (350–600 ms after stimulus onset).

By the end of this tutorial, you will understand the basic workflow of source localization and signal reconstruction using the MVPURE-py package.

[1]:

import mne

import os

mne.viz.set_3d_backend('pyvistaqt')

from mvpure_py import localizer, beamformer, viz, utils

Using pyvistaqt 3d backend.

We will use the sample_subject dataset provided on Figshare. If you wish to start from the beginning, please complete the tutorial Preprocessing data from oddball paradigm first.

[2]:

subject = "sample_subject"

subjects_dir = "subjects"

mne.set_config('SUBJECTS_DIR', subjects_dir)

# Reading mne.Epochs

epoched = mne.read_epochs(os.path.join(subjects_dir, subject, "_eeg", "_pre", f"{subject}_oddball-epo.fif"))

forward_path = os.path.join(subjects_dir, subject, "forward", f"{subject}_ico4-fwd.fif")

trans_path = os.path.join(subjects_dir, subject, "_eeg", "trans", f"{subject}-fit_trans.fif")

# We will be using only data for 'target' stimuli

target = epoched['target']

sel_epoched = target.copy()

sel_epoched = sel_epoched.set_eeg_reference('average', projection=True)

sel_epoched.apply_proj()

sel_evoked = sel_epoched.average()

Reading /Users/julia/Downloads/sample_subject/_eeg/_pre/sample_subject_oddball-epo.fif ...

Found the data of interest:

t = -199.22 ... 800.78 ms

0 CTF compensation matrices available

Not setting metadata

621 matching events found

No baseline correction applied

0 projection items activated

EEG channel type selected for re-referencing

Adding average EEG reference projection.

1 projection items deactivated

Average reference projection was added, but has not been applied yet. Use the apply_proj method to apply it.

Created an SSP operator (subspace dimension = 1)

1 projection items activated

SSP projectors applied...
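The log above shows that the average reference was added as an SSP projector and then applied. The minimal NumPy sketch below uses synthetic data, not the tutorial's recording, to show what that projection does. It subtracts the mean across channels at every sample, so the channels always sum to zero and the data lose one dimension. This is why the covariance logs later report "Reducing data rank from 128 -> 127".

```python
import numpy as np

def average_reference_projector(n_channels: int) -> np.ndarray:
    """P = I - (1/n) 1 1^T: subtracts the mean over channels at every time sample."""
    ones = np.ones((n_channels, 1))
    return np.eye(n_channels) - ones @ ones.T / n_channels

rng = np.random.default_rng(0)
n_channels, n_times = 128, 500
data = rng.standard_normal((n_channels, n_times))  # synthetic, full-rank "EEG"

P = average_reference_projector(n_channels)
referenced = P @ data

# Every time sample now sums to zero across channels ...
print(np.allclose(referenced.sum(axis=0), 0.0))  # True
# ... so one dimension is lost.
print(np.linalg.matrix_rank(data), np.linalg.matrix_rank(referenced))  # 128 127
```

Because \(P\) is a projection (\(P^2 = P\)), applying it a second time changes nothing.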

To perform source localization, we need a forward model that links activity at source locations to the sensors (in this case EEG channels). Here, we load the forward solution and convert it to a fixed-orientation representation.

[3]:

# Reading mne.Forward

fwd_vector = mne.read_forward_solution(forward_path)

# Using fixed orientation in forward solution

fwd = mne.convert_forward_solution(

fwd_vector,

surf_ori=True,

force_fixed=True,

use_cps=True

)

# Leadfield matrix

leadfield = fwd["sol"]["data"]

# Source positions extracted from forward model

src = fwd["src"]

Reading forward solution from /Users/julia/Downloads/sample_subject/forward/sample_subject_ico4-fwd.fif...

Reading a source space...

[done]

Reading a source space...

[done]

2 source spaces read

Desired named matrix (kind = 3523 (FIFF_MNE_FORWARD_SOLUTION_GRAD)) not available

Read EEG forward solution (5124 sources, 128 channels, free orientations)

Source spaces transformed to the forward solution coordinate frame

No patch info available. The standard source space normals will be employed in the rotation to the local surface coordinates....

Changing to fixed-orientation forward solution with surface-based source orientations...

[done]
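Conceptually, the forward model says that the sensor data are a linear mixture of source amplitudes, \(x = H s + n\), where \(H\) is the leadfield. In the free-orientation solution read above, each of the 5124 sources has three leadfield columns, one per orientation. The fixed-orientation conversion keeps a single column per source, oriented along the cortical surface normal. The sketch below is a simplification with random numbers. It ignores the rotation to surface coordinates and the cortical patch statistics that MNE can use (the log notes that no patch info is available here).

```python
import numpy as np

rng = np.random.default_rng(1)
n_channels, n_sources, n_times = 128, 10, 200

# Free orientation: three consecutive leadfield columns (x, y, z) per source.
H_free = rng.standard_normal((n_channels, 3 * n_sources))

# One unit-length orientation per source (the cortical surface normal in real data).
normals = rng.standard_normal((n_sources, 3))
normals /= np.linalg.norm(normals, axis=1, keepdims=True)

# Fixed orientation: combine each source's three columns along its normal.
H_fixed = np.column_stack(
    [H_free[:, 3 * i:3 * i + 3] @ normals[i] for i in range(n_sources)]
)
print(H_free.shape, H_fixed.shape)  # (128, 30) (128, 10)

# Forward model x = H s + n: sensor data from source amplitudes plus sensor noise.
s = rng.standard_normal((n_sources, n_times))
x = H_fixed @ s + 0.1 * rng.standard_normal((n_channels, n_times))
print(x.shape)  # (128, 200)
```

In the tutorial, the corresponding fixed-orientation matrix is `leadfield = fwd["sol"]["data"]`, with one column per source.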

“Sensory” processing

We will start by analysing processes in the “sensory” time window.

In an oddball paradigm, participants are presented with a sequence of frequent (standard) and infrequent (target) stimuli. The early neural responses to target stimuli reflect sensory processing, i.e. the brain’s initial registration of the incoming stimulus before higher-level cognitive mechanisms are engaged. We assume that, in this oddball paradigm, sensory processing occurs 50–200 ms after stimulus onset, so we compute the data covariance in this time range. To estimate the noise covariance, we use the baseline period from −200 ms to 0 ms, i.e. the interval before stimulus onset. This baseline is assumed to be free of stimulus-locked activity and provides a reference for separating signal from noise.
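The following NumPy sketch gives a rough illustration of how the two covariance matrices differ. It pools the samples of a time window across all epochs and computes their covariance. The data are synthetic: random noise plus a 10 Hz burst on one channel between 50 and 200 ms. Removing each channel's mean is a choice made in this sketch. `mne.compute_covariance` with `method="empirical"` handles the mean according to its own options.

```python
import numpy as np

def pooled_covariance(epochs: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Covariance of the time samples selected by `mask`, pooled over epochs.

    epochs: shape (n_epochs, n_channels, n_times); mask: boolean, shape (n_times,).
    """
    # (n_epochs, n_channels, n_sel) -> (n_channels, n_epochs * n_sel)
    x = np.concatenate(list(epochs[:, :, mask]), axis=1)
    x = x - x.mean(axis=1, keepdims=True)  # remove each channel's mean (sketch choice)
    return x @ x.T / x.shape[1]

rng = np.random.default_rng(2)
n_epochs, n_channels, n_times = 78, 8, 256
sfreq = 256.0
times = np.arange(n_times) / sfreq - 0.2  # -0.2 s ... ~0.8 s

epochs = rng.standard_normal((n_epochs, n_channels, n_times))  # background noise
# Stimulus-locked 10 Hz burst on channel 0, roughly 50-200 ms after onset.
burst = (times >= 0.05) & (times <= 0.2)
epochs[:, 0, burst] += 3.0 * np.sin(2 * np.pi * 10 * times[burst])

N = pooled_covariance(epochs, times < 0)  # baseline -> noise covariance
R = pooled_covariance(epochs, burst)      # sensory window -> data covariance
print(R[0, 0] > N[0, 0])  # True: the evoked burst raises channel-0 variance
```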

[4]:

# Compute noise covariance

noise_cov = mne.compute_covariance(

sel_epoched,

tmin=-0.2,

tmax=0,

method="empirical"

)

# Compute data covariance for range corresponding to sensory processing

data_cov_sen = mne.compute_covariance(

sel_epoched,

tmin=0.05,

tmax=0.2,

method="empirical"

)

# Subset signal for given time range (copy first: Evoked.crop() modifies the object in place)

signal_sen = sel_evoked.copy().crop(

tmin=0.05,

tmax=0.2

)

Created an SSP operator (subspace dimension = 1)

Setting small EEG eigenvalues to zero (without PCA)

Reducing data rank from 128 -> 127

Estimating covariance using EMPIRICAL

Done.

Number of samples used : 4056

[done]

Created an SSP operator (subspace dimension = 1)

Setting small EEG eigenvalues to zero (without PCA)

Reducing data rank from 128 -> 127

Estimating covariance using EMPIRICAL

Done.

Number of samples used : 3042

[done]
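The "Number of samples used" lines can be checked with a little arithmetic. Each count equals the number of time samples in the window multiplied by the number of target epochs. The sketch below rests on three assumptions:

- a sampling rate of 256 Hz, consistent with the epoch limits −199.22 ms = −51/256 s and 800.78 ms = 205/256 s in the log;
- 78 target epochs, inferred from 4056 / 52;
- both window edges snapped to the nearest sample.

Under these assumptions, it reproduces the counts 4056 and 3042 above, and 5070 for the cognitive window later in the tutorial.

```python
def n_samples_in_window(tmin: float, tmax: float, sfreq: float) -> int:
    """Samples from tmin to tmax inclusive, with both edges snapped to the nearest sample."""
    first = round(tmin * sfreq)
    last = round(tmax * sfreq)
    return last - first + 1

SFREQ = 256.0   # assumption: matches t = -199.22 ... 800.78 ms (-51/256 s ... 205/256 s)
N_EPOCHS = 78   # assumption: number of target epochs, inferred as 4056 / 52

windows = {
    "noise, -200 to 0 ms": (-0.2, 0.0),
    "sensory, 50 to 200 ms": (0.05, 0.2),
    "cognitive, 350 to 600 ms": (0.35, 0.6),
}
for name, (tmin, tmax) in windows.items():
    n = n_samples_in_window(tmin, tmax, SFREQ)
    print(f"{name}: {n} samples x {N_EPOCHS} epochs = {n * N_EPOCHS}")
# noise, -200 to 0 ms: 52 samples x 78 epochs = 4056
# sensory, 50 to 200 ms: 39 samples x 78 epochs = 3042
# cognitive, 350 to 600 ms: 65 samples x 78 epochs = 5070
```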

\(RN^{-1}\) eigenvalue analysis

Before attempting source localization, we need to decide how many sources to model and which rank to use. We propose analyzing the eigenvalues of \(RN^{-1}\), the product of the data covariance matrix \(R\) and the inverse of the noise covariance matrix \(N\). For the theoretical background, see the paper.
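The minimal NumPy sketch below makes the idea concrete. It computes the eigenvalues of \(RN^{-1}\) for synthetic covariances built as \(R = N + H Q H^T\): noise plus three active sources with leadfield columns \(H\) and powers on the diagonal of \(Q\). Directions that contain only noise give eigenvalues equal to 1, while each active source adds an eigenvalue above 1.

The "count eigenvalues above 1" rule is only an illustration. The paper describes how `suggest_n_sources_and_rank` derives its suggested number of sources and rank; that procedure is not reproduced here. The real \(N\) is also rank-deficient after average referencing, so the Cholesky step below would fail on it unless the dimension is reduced first.

```python
import numpy as np

def rn_inv_eigenvalues(R: np.ndarray, N: np.ndarray) -> np.ndarray:
    """Eigenvalues of R @ inv(N), sorted in descending order.

    R N^{-1} is not symmetric, but with N = L L^T (Cholesky) it is similar to
    the symmetric matrix L^{-1} R L^{-T}, so its eigenvalues are real.
    """
    L = np.linalg.cholesky(N)
    L_inv = np.linalg.inv(L)
    return np.sort(np.linalg.eigvalsh(L_inv @ R @ L_inv.T))[::-1]

rng = np.random.default_rng(3)
n_channels, n_active = 20, 3

A = rng.standard_normal((n_channels, n_channels))
N = A @ A.T + n_channels * np.eye(n_channels)   # synthetic full-rank noise covariance
H = rng.standard_normal((n_channels, n_active))  # leadfield columns of 3 active sources
R = N + H @ np.diag([50.0, 20.0, 5.0]) @ H.T     # data = noise + source activity

eig = rn_inv_eigenvalues(R, N)
print(np.round(eig[:5], 2))
# Exactly n_active eigenvalues exceed 1; the remaining ones equal 1 (noise-only directions).
print(int(np.sum(eig > 1 + 1e-8)))  # 3
```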

[5]:

sugg_n_sources, sugg_rank = localizer.suggest_n_sources_and_rank(

R=data_cov_sen.data,

N=noise_cov.data,

show_plot=True,

subject=subject,

s=14

)

Suggested number of sources to localize: 62

Suggested rank is: 42

Localize

Based on the eigenvalue analysis above, we will localize 62 sources using a rank of 42. We use the function mvpure_py.localizer.localize, which performs the actual source localization. Its main parameters are:

subject: the subject ID (here: "sample_subject").

subjects_dir: directory containing the subject folders.

localizer_to_use: the algorithm variant. Here we choose "mpz_mvp" because it provides the highest spatial resolution. Other options are "mai", "mpz", and "mai_mvp". For details, see the paper or the function documentation.

n_sources_to_localize: number of sources to localize. We use the number suggested by the \(RN^{-1}\) analysis.

R: data covariance matrix.

N: noise covariance matrix.

forward: the mne.Forward object for this subject.

r: optimization rank parameter. We use the value suggested by the eigenvalue analysis, but it can be any integer smaller than n_sources_to_localize.

[6]:

locs_sen = localizer.localize(

subject=subject,

subjects_dir=subjects_dir,

localizer_to_use=["mpz_mvp"],

n_sources_to_localize=sugg_n_sources,

R=data_cov_sen.data,

N=noise_cov.data,

forward=fwd,

r=sugg_rank

)

Calculating activity index for localizer: mpz_mvp


[Activity Index Result]

Selected indices (index_max): [31, 3557, 795, 1213, 1690, 2506, 2697, 2602, 1225, 83, 1966, 2085, 994, 2850, 2212, 4404, 4304, 4882, 608, 2522, 2325, 1876, 6, 3255, 1860, 3714, 4804, 2690, 84, 371, 2454, 3624, 5108, 1971, 265, 650, 992, 2727, 42, 4698, 329, 2574, 1258, 4002, 3368, 4920, 3, 33, 1405, 2887, 2333, 2771, 4604, 3333, 302, 306, 4422, 2597, 2580, 16, 4266, 3815]

Rank parameter (r): 42
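The progress bars for this step (one per iteration, each covering all 5124 candidates) show that the search is greedy. Each iteration scans every candidate vertex and adds one source. The printed indices are leadfield columns, presumably listed in the order they were selected.

The sketch below illustrates this sequential search with a multi-source activity index of the form \(\operatorname{tr}\big[(H^T N^{-1} H)(H^T R^{-1} H)^{-1}\big] - l\), where \(H\) holds the \(l\) leadfield columns selected so far. This form is our assumption for a "mai"-style index. The "mpz_mvp" variant used above is a different, rank-constrained index, and this code is not the mvpure_py implementation.

```python
import numpy as np

def activity_index(H, R_inv, N_inv):
    """MAI-style index tr[(H^T N^-1 H)(H^T R^-1 H)^-1] - l for the l columns of H."""
    noise_term = H.T @ N_inv @ H
    data_term = H.T @ R_inv @ H
    return float(np.trace(noise_term @ np.linalg.inv(data_term))) - H.shape[1]

def greedy_localize(leadfield, R, N, n_sources):
    """Select sources one at a time; each iteration scans every remaining candidate."""
    R_inv, N_inv = np.linalg.inv(R), np.linalg.inv(N)
    selected = []
    for _ in range(n_sources):
        best_idx, best_val = -1, -np.inf
        for j in range(leadfield.shape[1]):
            if j in selected:
                continue
            val = activity_index(leadfield[:, selected + [j]], R_inv, N_inv)
            if val > best_val:
                best_idx, best_val = j, val
        selected.append(best_idx)
    return selected

rng = np.random.default_rng(4)
n_channels, n_candidates = 32, 40
leadfield = rng.standard_normal((n_channels, n_candidates))
leadfield /= np.linalg.norm(leadfield, axis=0)      # unit-norm columns

true_sources = [17, 3]                              # source 17 is the stronger one
N = np.eye(n_channels)                              # white sensor noise
H_true = leadfield[:, true_sources]
R = N + H_true @ np.diag([100.0, 50.0]) @ H_true.T  # exact (population) data covariance

print(greedy_localize(leadfield, R, N, n_sources=2))  # [17, 3]
```

For a single source with unit-norm leadfield and white noise, this index equals the source power, so the stronger source is picked first.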

[7]:

# Transform leadfield indices to vertices

lh_vert_sen, lh_idx_sen, rh_vert_sen, rh_idx_sen = utils.transform_leadfield_indices_to_vertices(

lf_idx=locs_sen["sources"],

src=src,

hemi="both",

include_mapping=True

)

locs_sen.add_vertices_info(

lh_vertices=lh_vert_sen,

lh_indices=lh_idx_sen,

rh_vertices=rh_vert_sen,

rh_indices=rh_idx_sen

)
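Leadfield columns and cortical vertices are indexed differently. In an MNE surface source space, the leadfield lists the sources of the left hemisphere first, in the order of `src[0]["vertno"]`, followed by those of the right hemisphere (`src[1]["vertno"]`). With ico4 spacing each hemisphere has 2562 sources, which is consistent with the 5124 columns seen above. The hypothetical helper below shows this mapping on a toy source space. It is not the mvpure_py implementation of `transform_leadfield_indices_to_vertices`, whose exact return values we do not reproduce.

```python
def leadfield_index_to_vertex(lf_idx, lh_vertno, rh_vertno):
    """Map a leadfield column index to (hemisphere, vertex number).

    Assumes the MNE convention: left-hemisphere sources first (in lh_vertno order),
    then right-hemisphere sources (in rh_vertno order).
    """
    n_lh = len(lh_vertno)
    if lf_idx < n_lh:
        return "lh", lh_vertno[lf_idx]
    return "rh", rh_vertno[lf_idx - n_lh]

# Toy source space: 4 used vertices per hemisphere (ico4 has 2562 per hemisphere).
lh_vertno = [0, 12, 57, 301]
rh_vertno = [3, 8, 99, 250]
print([leadfield_index_to_vertex(i, lh_vertno, rh_vertno) for i in (1, 3, 4, 6)])
# [('lh', 12), ('lh', 301), ('rh', 3), ('rh', 99)]
```

With a real forward solution, `lh_vertno` and `rh_vertno` would be `src[0]["vertno"]` and `src[1]["vertno"]`.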

Optionally, we can plot the localized sources on the brain surface:

[8]:

# locs_sen.plot_localized_sources()

Here, the size and color of the markers indicate the order of localization:

large, red foci: sources localized earlier

small, white foci: sources localized later

Reconstruct

Now that we have localized the sources of interest, the next step is to reconstruct their activity. First, we restrict the original forward model to the localized sources only. This reduces the forward solution to the relevant subspace:

[9]:

# Subset mne.Forward

new_fwd_sen = utils.subset_forward(

old_fwd=fwd,

localized=locs_sen,

hemi="both"

)
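At the matrix level, subsetting the forward model amounts to keeping only the leadfield columns of the localized sources. The NumPy sketch below uses a random stand-in for `fwd["sol"]["data"]` and the first three indices from the sensory log. It sorts the indices on the assumption that the subset forward keeps the source-space order (left hemisphere first, ascending vertices) rather than the localization order. We have not verified how `utils.subset_forward` orders its sources.

```python
import numpy as np

rng = np.random.default_rng(5)
# Random stand-in for the fixed-orientation leadfield: 128 channels x 5124 sources.
leadfield = rng.standard_normal((128, 5124))
localized = [31, 3557, 795]  # first three indices printed for the sensory window

# Assumption: keep source-space order (ascending column index), not localization order.
keep = np.sort(localized)
leadfield_sub = leadfield[:, keep]
print(keep.tolist(), leadfield_sub.shape)  # [31, 795, 3557] (128, 3)
```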

To compute the filters, we will use beamformer.make_filter.

This function works similarly to mne.beamformer.make_lcmv, but with additional parameters specific to MVPURE.

We provide these in a dictionary called mvpure_params:

filter_type: type of beamformer to use. Options are MVP_R and MVP_N. Here we use MVP_R, as it is a generalization of the commonly used LCMV filter.

filter_rank: optimization rank parameter. For best performance, we use the same rank as in the localization step.

Note: setting filter_rank="full" reduces the method to a standard LCMV filter. For theoretical details, see the paper.
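The sketch below contrasts the unit-gain LCMV filter \(W = (H^T R^{-1} H)^{-1} H^T R^{-1}\) with a reduced-rank variant. For illustration only, we assume that the rank-\(r\) filter projects the LCMV filter onto the \(r\) eigenvectors of \(H^T R^{-1} H\) with the largest eigenvalues. When \(r\) equals the number of sources, the projection is the identity and the filter is plain LCMV, in line with the note about a full filter rank. See the paper for the exact MVP_R definition.

Two further simplifications apply. The diagonal-loading line only mimics the spirit of `reg=0.05`. The noise whitening that `make_filter` performs ("Whitening the forward solution" in its log) is omitted.

```python
import numpy as np

def lcmv_filter(H, R):
    """Unit-gain LCMV filter W = (H^T R^-1 H)^-1 H^T R^-1 for leadfield H (n_channels x k)."""
    R_inv = np.linalg.inv(R)
    return np.linalg.solve(H.T @ R_inv @ H, H.T @ R_inv)

def reduced_rank_filter(H, R, rank):
    """Illustrative rank-constrained filter: the LCMV filter projected onto the
    `rank` eigenvectors of H^T R^-1 H with the largest eigenvalues (assumption)."""
    R_inv = np.linalg.inv(R)
    _, eigvecs = np.linalg.eigh(H.T @ R_inv @ H)  # eigenvalues in ascending order
    U = eigvecs[:, -rank:]                         # keep the `rank` largest
    return U @ U.T @ lcmv_filter(H, R)

rng = np.random.default_rng(6)
n_channels, k, n_times = 32, 6, 200
H = rng.standard_normal((n_channels, k))           # leadfield of the localized sources
R = H @ np.diag(rng.uniform(1.0, 10.0, k)) @ H.T + np.eye(n_channels)
R += 0.05 * np.trace(R) / n_channels * np.eye(n_channels)  # diagonal loading, cf. reg=0.05

W_full = lcmv_filter(H, R)
W_r = reduced_rank_filter(H, R, rank=4)
print(np.allclose(W_full @ H, np.eye(k)))                      # True: unit gain
print(np.allclose(reduced_rank_filter(H, R, rank=k), W_full))  # True: full rank == LCMV
print(np.linalg.matrix_rank(W_r))                              # 4

# Applying a filter: source time courses = W @ sensor data (cf. apply_filter).
x = rng.standard_normal((n_channels, n_times))
print((W_r @ x).shape)                                         # (6, 200)
```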

[10]:

# MVPURE filter parameters

mvpure_params = {

'filter_type': 'MVP_R',

'filter_rank': sugg_rank

}

[11]:

# Build beamformer filter (similar to LCMV but with MVPURE options)

filter_sen = beamformer.make_filter(

signal_sen.info,

new_fwd_sen,

data_cov_sen,

reg=0.05,

noise_cov=noise_cov,

pick_ori=None, # not needed with fixed orientation forward

weight_norm=None,

rank=None,

mvpure_params=mvpure_params

)

Computing rank from covariance with rank=None

Using tolerance 4.4e-13 (2.2e-16 eps * 128 dim * 15 max singular value)

Estimated rank (eeg): 86

EEG: rank 86 computed from 128 data channels with 1 projector

Computing rank from covariance with rank=None

Using tolerance 3.6e-13 (2.2e-16 eps * 128 dim * 13 max singular value)

Estimated rank (eeg): 86

EEG: rank 86 computed from 128 data channels with 1 projector

Making MVP_R beamformer with rank {'eeg': 86} (note: MNE-Python rank)

Computing inverse operator with 128 channels.

128 out of 128 channels remain after picking

Selected 128 channels

Whitening the forward solution.

Created an SSP operator (subspace dimension = 1)

Computing rank from covariance with rank={'eeg': 86}

Setting small EEG eigenvalues to zero (without PCA)

Creating the source covariance matrix

Adjusting source covariance matrix.

Computing beamformer filters for 62 sources

MVP_R computation - in progress...

Filter rank: 42

Filter computation complete

[12]:

# Apply filter to cropped evoked response

stc_sen = beamformer.apply_filter(signal_sen, filter_sen)

Then we attach the resulting mne.SourceEstimate to the localized sources object, making it easier to visualize:

[13]:

# Add reconstructed source time course

locs_sen.add_stc(stc_sen)

Finally, let’s plot the localized sources with their reconstructed activity:

[14]:

viz.plot_sources_with_activity(

subject=subject,

stc=stc_sen

)

Using control points [2.91332758e-09 3.43983961e-09 7.89233742e-09]

[14]:

<mne.viz._brain._brain.Brain at 0x145ca1010>

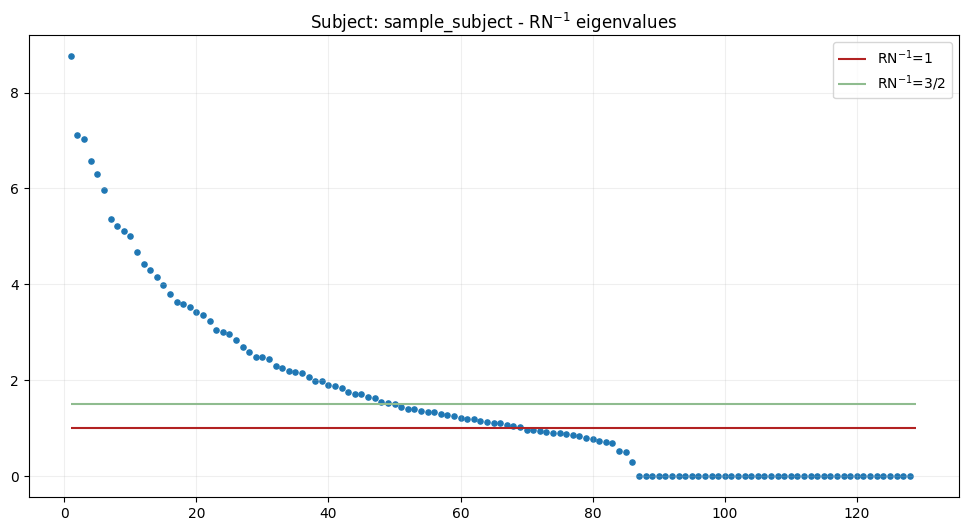

“Cognitive” task

After examining the early sensory responses, we now turn to the later cognitive stage of processing in the oddball paradigm. In EEG, target stimuli typically evoke a P300 component — a positive deflection peaking around 300–600 ms after stimulus onset. This response is thought to reflect higher-level cognitive processes, such as attention allocation and stimulus evaluation, in contrast to the earlier sensory responses.

For this dataset, we will therefore define the cognitive time window as 350–600 ms. The pipeline remains the same as before:

Use the same noise covariance as before (always estimated from −200 to 0 ms).

Compute data covariance in the cognitive window (350–600 ms).

Subset the evoked signal to this time range.

[15]:

# Compute data covariance for range corresponding to cognitive processing

data_cov_task = mne.compute_covariance(

sel_epoched,

tmin=0.35,

tmax=0.6,

method="empirical"

)

# No need to recompute `noise_cov`: the baseline interval (-200 to 0 ms) is the same

sel_evoked = sel_epoched.average()

# Subset signal for given time range (copy first: Evoked.crop() modifies the object in place)

signal_task = sel_evoked.copy().crop(

tmin=0.35,

tmax=0.6

)

Created an SSP operator (subspace dimension = 1)

Setting small EEG eigenvalues to zero (without PCA)

Reducing data rank from 128 -> 127

Estimating covariance using EMPIRICAL

Done.

Number of samples used : 5070

[done]

From here, we can repeat the same steps as in the sensory section:

analyze eigenvalues of \(RN^{-1}\),

localize sources,

reconstruct signals with MVPURE filters,

and finally visualize the results.

[16]:

# Suggest number of sources to localize

# and optimization parameter to use for both localization and reconstruction

sugg_n_sources, sugg_rank = localizer.suggest_n_sources_and_rank(

R=data_cov_task.data,

N=noise_cov.data,

show_plot=True,

subject=subject,

s=14

)

Suggested number of sources to localize: 69

Suggested rank is: 50

[17]:

# Localize

locs_task = localizer.localize(

subject=subject,

subjects_dir=subjects_dir,

localizer_to_use=["mpz_mvp"],

n_sources_to_localize=sugg_n_sources,

R=data_cov_task.data,

N=noise_cov.data,

forward=fwd,

r=sugg_rank,

)

Calculating activity index for localizer: mpz_mvp


[Activity Index Result]

Selected indices (index_max): [1689, 1291, 1489, 2159, 4405, 5051, 2039, 800, 2020, 3224, 1792, 4998, 821, 1865, 3762, 4165, 2372, 2471, 4817, 495, 1755, 2548, 3334, 1572, 2322, 2008, 4379, 3733, 4426, 2527, 2230, 2362, 4774, 356, 2545, 902, 108, 3720, 211, 481, 64, 2740, 2303, 4641, 148, 170, 1460, 3454, 68, 4850, 1732, 641, 2310, 4678, 764, 669, 1376, 3730, 2856, 4542, 4680, 2932, 3896, 600, 3172, 2564, 2631, 4982, 1342]

Rank parameter (r): 50

[18]:

# Transform leadfield indices to vertices

lh_vert_task, lh_idx_task, rh_vert_task, rh_idx_task = utils.transform_leadfield_indices_to_vertices(

lf_idx=locs_task["sources"],

src=src,

hemi="both",

include_mapping=True

)

locs_task.add_vertices_info(

lh_vertices=lh_vert_task,

lh_indices=lh_idx_task,

rh_vertices=rh_vert_task,

rh_indices=rh_idx_task

)

[19]:

new_fwd_task = utils.subset_forward(

old_fwd=fwd,

localized=locs_task,

hemi="both"

)

[20]:

# MVPURE filter parameters for the cognitive window

mvpure_params = {

'filter_type': 'MVP_R',

'filter_rank': sugg_rank

}

filter_task = beamformer.make_filter(

signal_task.info,

new_fwd_task,

data_cov_task,

reg=0.05,

noise_cov=noise_cov,

pick_ori=None, # not needed with fixed orientation forward

weight_norm=None,

rank=None,

mvpure_params=mvpure_params

)

Computing rank from covariance with rank=None

Using tolerance 5.2e-13 (2.2e-16 eps * 128 dim * 18 max singular value)

Estimated rank (eeg): 86

EEG: rank 86 computed from 128 data channels with 1 projector

Computing rank from covariance with rank=None

Using tolerance 3.6e-13 (2.2e-16 eps * 128 dim * 13 max singular value)

Estimated rank (eeg): 86

EEG: rank 86 computed from 128 data channels with 1 projector

Making MVP_R beamformer with rank {'eeg': 86} (note: MNE-Python rank)

Computing inverse operator with 128 channels.

128 out of 128 channels remain after picking

Selected 128 channels

Whitening the forward solution.

Created an SSP operator (subspace dimension = 1)

Computing rank from covariance with rank={'eeg': 86}

Setting small EEG eigenvalues to zero (without PCA)

Creating the source covariance matrix

Adjusting source covariance matrix.

Computing beamformer filters for 69 sources

MVP_R computation - in progress...

Filter rank: 50

Filter computation complete

[21]:

stc_task = beamformer.apply_filter(signal_task, filter_task)

# Add source estimate to mvpure_py.Localized object

locs_task.add_stc(stc_task)

# Plot

viz.plot_sources_with_activity(

subject=subject,

stc=stc_task,

)

Using control points [2.84206626e-09 3.11743662e-09 5.08812233e-09]

[21]:

<mne.viz._brain._brain.Brain at 0x1459b1810>